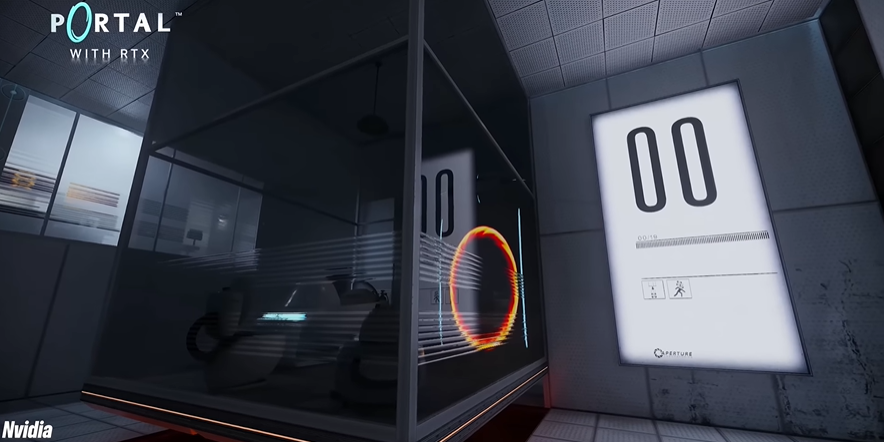

How much is acceptable? Let’s pause for a second, because Nvidia unveiled the RTX 4090 and a couple of 4080s a few days ago, and there’s some exciting stuff. We’ve got a brand-new architecture, Ada Lovelace; we’ve got DLSS 3, with Nvidia throwing out some crazy numbers of two to four times the performance; and, of course, we have Portal RTX.

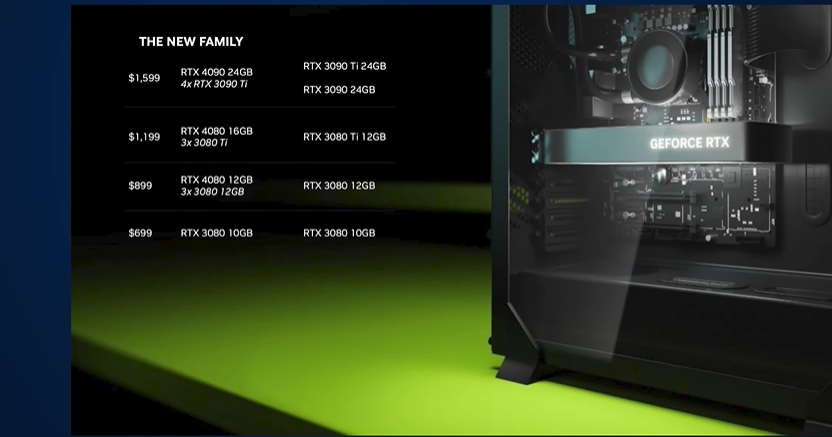

I had a lot of questions, starting with the prices, and those are some scary numbers. It’s even worse outside the US: prices in many countries are higher, but remember that we include VAT (sales tax) in our prices, whereas US prices don’t include sales tax. Let’s start with the cheapest 4080, the 12 GB version, which is launching at $200 more than the 3080 launched at a couple of years ago, which isn’t ideal. Nvidia is saying, perhaps fairly, that you’re getting roughly 3090 Ti-level performance. I suspect prices are this high because Nvidia still has a lot of 3000 Series cards to sell: the 3070 and the 3060 are all still on sale and, incredibly, the 3080 is still the same price it was when it launched a couple of years ago. However, the $329 RTX 3060 still represents excellent value for money among new cards. I think the higher-end 4080, the 16 GB version, is too expensive, and I think Nvidia has done that on purpose, so that it’s not much of a leap to go for the much more powerful 4090.

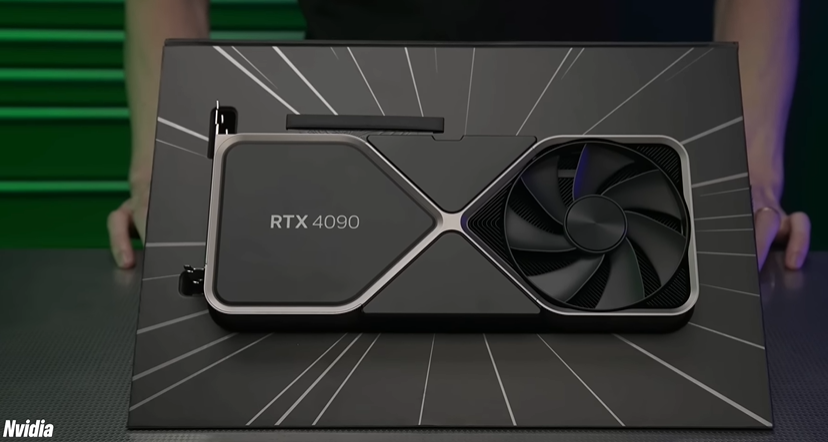

In raw spec terms, the 4090 looks like a much better proposition, and you know what, I actually don’t have that much of a problem with a $1,600 4090, considering we are probably going to get at least a 50 percent boost in rasterized performance alone over the 3090 Ti, which was $2,000 until recently. I don’t think that’s too unreasonable if you are going for crazy high-end 4K 120 Hz or 8K 60 Hz setups, or just want the best graphics options with ray tracing turned on. That $1,600 is for the Founders Edition card, though, and I have no doubt third-party boards with crazy triple-fan setups will be pushing and even exceeding the $2,000 mark very soon afterwards, although not from EVGA. I’ll tell you what does bother me, though: the two different 4080 cards. Since the beginning of time, when a card has come in two versions, the only difference between them has been the VRAM, which mostly matters at high resolutions: if you’re at 4K, you may want more VRAM. But these are significantly different cards. The 16 GB 4080 gets 27 percent more CUDA cores, a wider memory bus, and 38 and 22 percent faster RT and tensor core performance, respectively.
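To make the gap between the two 4080s concrete, here is a quick percentage calculation. It is a sketch that assumes the publicly announced specs of the two configurations (9,728 vs 7,680 CUDA cores, 256-bit vs 192-bit memory bus, 16 vs 12 GB of VRAM); those figures and the `percent_more` helper are not from the original text.

```python
# Announced specs of the two RTX 4080 configurations (assumed; not stated in the article).
SPECS = {
    "RTX 4080 16GB": {"cuda_cores": 9728, "memory_bus_bits": 256, "vram_gb": 16},
    "RTX 4080 12GB": {"cuda_cores": 7680, "memory_bus_bits": 192, "vram_gb": 12},
}


def percent_more(bigger: float, smaller: float) -> float:
    """How many percent larger `bigger` is than `smaller`."""
    return (bigger / smaller - 1) * 100


big, small = SPECS["RTX 4080 16GB"], SPECS["RTX 4080 12GB"]
for key in ("cuda_cores", "memory_bus_bits", "vram_gb"):
    print(f"{key}: {percent_more(big[key], small[key]):.0f}% more on the 16 GB card")
```

Under those assumed specs, the core-count difference works out to about 27 percent, matching the figure above, while the bus width and VRAM differ by about a third.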

To be honest, the name is far too misleading for someone going out to buy a 4080. You might think, “Oh, I can get the 12 GB version for $300 less than the 16 GB version. That sounds like a much better deal,” but you’re probably getting 20 to 40 percent less performance. We’ll have to wait for the reviews and benchmarks, but I believe the base 4080 should have been called the 4070. I’m sure there are plenty of marketing reasons why it wasn’t, including the fact that there will be a 4070 next year and that Nvidia doesn’t want people freaking out that there’s a $900 4070. But really, it’s okay. Let me just calm down for a second, because there is a lot to be excited about with these new 4000 series cards.
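Whether the 12 GB card is the “better deal” depends on how much slower it turns out to be. The sketch below divides price by relative performance. It assumes US launch prices of $899 (12 GB) and $1,199 (16 GB), which are not stated in the article, and uses the article’s guess of 20 to 40 percent less performance as hypothetical inputs, not benchmark results.

```python
# Assumed US launch prices (MSRP); street prices and other regions will differ.
PRICE_4080_16GB = 1199
PRICE_4080_12GB = 899


def dollars_per_performance(price_usd: float, relative_performance: float) -> float:
    """Price divided by performance relative to the 16 GB card (1.0 = equal)."""
    return price_usd / relative_performance


baseline = dollars_per_performance(PRICE_4080_16GB, 1.0)
# Below this relative performance, the 12 GB card costs more per unit of performance.
break_even = PRICE_4080_12GB / PRICE_4080_16GB

for perf in (0.8, 0.7, 0.6):  # hypothetical: 20, 30 and 40 percent slower
    cost = dollars_per_performance(PRICE_4080_12GB, perf)
    print(f"12 GB at {perf:.0%} of the 16 GB card: ${cost:,.0f} vs ${baseline:,.0f}")
print(f"break-even at {break_even:.1%} of 16 GB performance")
```

With these assumed prices, the cheaper card only comes out ahead on value if it delivers roughly three quarters or more of the 16 GB card’s performance.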

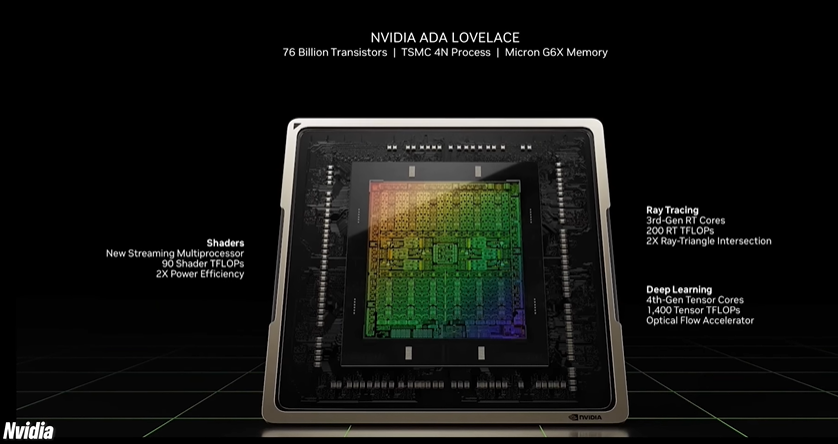

Not that this generation is about saving power: the 4090 can hit a humongous 660-watt boosted TGP. There’s also lower-power memory, and the RT and tensor cores have both been bumped up a generation, although it’s hard to know exactly what that means without testing.

Crucially, RT performance is way better and more efficient, which is why Nvidia made the Racer RTX demo, which is fully ray-traced. The new RTX 4000 series cards also feature dual AV1 (NVENC) encoders, allowing you to stream 1440p60 gameplay at much higher quality. Nvidia says this could speed up my 4K H.264 exports by 55 percent, which is something I’ll be testing myself. In terms of design, only the 16 GB 4080 and the 4090 get Founders Editions, and both have been redesigned, with 20 percent more airflow and a new heatsink. And, most importantly of all, there’s a new font.

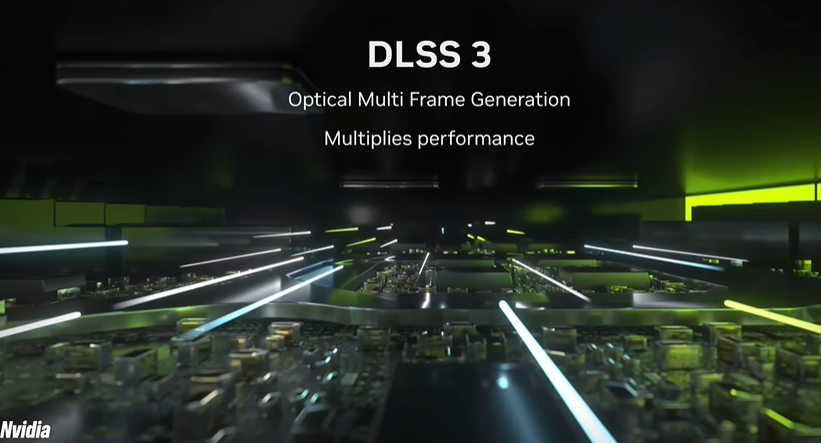

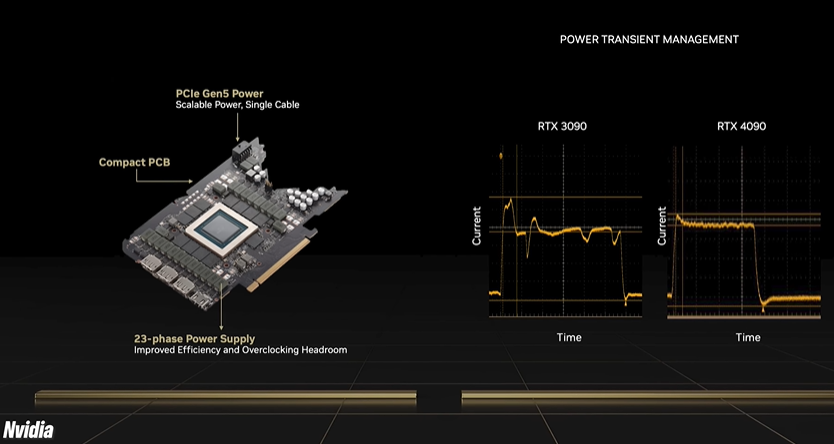

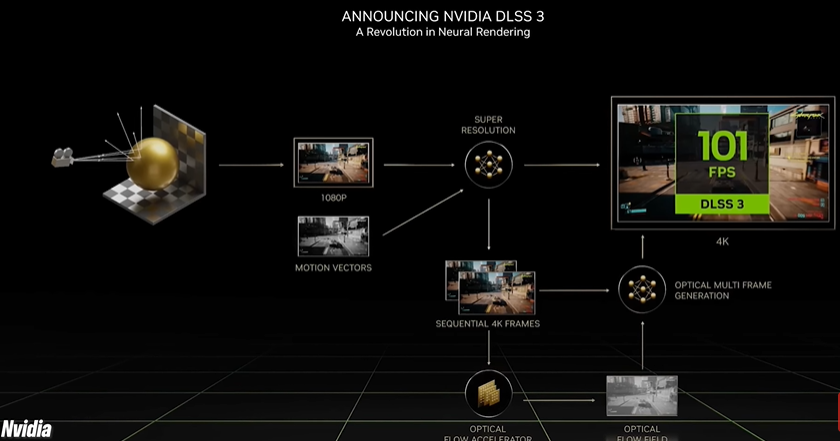

All three cards use a new PCIe 5.0 power cable that allows up to 660 watts, meaning you can run a 4090 from a single cable. The problem is that you’ll need a PCIe 5.0-compatible PSU, and those are only just coming on the market, with a handful arriving in October; otherwise, you’ll need to use the bundled adapter. Whether you like or dislike Nvidia, I think we can all agree that DLSS is pretty magical, and probably the biggest news for me was DLSS 3 and its clever new frame generation technology. DLSS 1, 2 and 2.1 all use AI running on the tensor cores to upscale a lower-resolution image to a higher resolution. DLSS 3 can now also take two sequential frames and compute a new frame to insert between them, boosting your frame rate.
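Why inserting a frame between two others is harder than it sounds can be shown with a toy example. The sketch below, using one-row “frames” and hypothetical helper names, compares a naive pixel-by-pixel blend with moving pixels halfway along a known motion vector. It is only an illustration of the general idea of motion-compensated interpolation, not Nvidia’s actual frame generation algorithm; here the motion vector is simply given, whereas a real system has to estimate it.

```python
# Toy 1-D frames: each frame is a row of pixel brightness values (0.0 black, 1.0 white).


def make_frame(width: int, bright_pixel: int) -> list[float]:
    """A black row with a single white pixel standing in for a moving object."""
    frame = [0.0] * width
    frame[bright_pixel] = 1.0
    return frame


def blend_midpoint(a: list[float], b: list[float]) -> list[float]:
    """Naive interpolation: average the two frames pixel by pixel."""
    return [(x + y) / 2 for x, y in zip(a, b)]


def motion_compensated_midpoint(a: list[float], flow: list[int]) -> list[float]:
    """Move each lit pixel of frame `a` halfway along its motion vector.

    flow[i] is the displacement (in pixels) of pixel i between frame `a` and
    the next rendered frame. Black background pixels are not moved.
    """
    out = [0.0] * len(a)
    for i, value in enumerate(a):
        if value > 0.0:
            target = i + round(flow[i] / 2)
            if 0 <= target < len(out):
                out[target] = max(out[target], value)
    return out


frame_a = make_frame(9, 2)  # object at x = 2
frame_b = make_frame(9, 6)  # object has moved to x = 6
flow = [4 if v > 0.0 else 0 for v in frame_a]

print(blend_midpoint(frame_a, frame_b))            # two half-bright "ghosts" at x = 2 and x = 6
print(motion_compensated_midpoint(frame_a, flow))  # one full-bright object at x = 4
```

The naive blend produces a doubled, half-transparent object, while following the motion puts a single object where it should be halfway between the two rendered frames.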

In fact, DLSS 3 AI-generates 7 out of every 8 displayed pixels, which is why it can double frame rates rather than just add a third more. Depending on how it’s implemented, we’re looking at up to two times the performance in rasterized games and four times in RT-enabled games. This isn’t just your regular old frame interpolation technology, either: it uses optical flow, so it generates new frames based on what the in-game camera is looking at and how the in-game objects are moving. It’s very clever stuff. In Nvidia’s example, this increases the frame rate in Cyberpunk at 4K with RT from the low 20s up to 90 or even over 100 FPS, and from the mid-50s to over a hundred in Flight Simulator. It’s interesting stuff, but we’ll have to wait and see whether any smoke and mirrors are going on until I get my review samples here and can test them properly. Alongside DLSS 3, there’s also Nvidia Reflex, which has been around for a couple of years but is now fully baked into DLSS 3 to reduce latency.
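The “7 out of every 8 pixels” figure follows from combining upscaling with frame generation. The sketch below assumes an upscaling mode that renders at half the output resolution on each axis (for example 1920x1080 for a 3840x2160 output) and one generated frame per rendered frame; both are assumptions for illustration, not settings given in the article.

```python
from fractions import Fraction


def generated_pixel_share(render_scale_per_axis: Fraction,
                          generated_per_rendered_frame: int) -> Fraction:
    """Share of displayed pixels that are not traditionally rendered.

    render_scale_per_axis: internal resolution / output resolution on each axis
        (assumed 1/2 here, e.g. 1920x1080 rendered for 3840x2160 output).
    generated_per_rendered_frame: generated frames inserted per rendered frame
        (assumed 1 for frame generation).
    """
    rendered_pixels_per_frame = render_scale_per_axis ** 2  # 1/2 * 1/2 = 1/4 of the pixels
    rendered_frame_share = Fraction(1, 1 + generated_per_rendered_frame)  # 1 in 2 frames
    return 1 - rendered_pixels_per_frame * rendered_frame_share


print(generated_pixel_share(Fraction(1, 2), 1))  # 7/8 (upscaling plus frame generation)
print(generated_pixel_share(Fraction(1, 2), 0))  # 3/4 (upscaling alone)
```

Only one quarter of each rendered frame’s pixels are drawn conventionally, and only every other displayed frame is rendered at all, so one eighth of the pixels are rendered and the other seven eighths are generated.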

To what extent does it reduce latency? Does it mean you won’t notice any difference at all? We’ll have to see. I suspect this isn’t going to be something esports gamers will want to use because, no doubt, frame generation will increase latency a bit. Still, Reflex is kind of the unsung hero of Nvidia’s innovation over the last few years: it removes the render queue by syncing the CPU and the GPU, which drops system latency. I’ve tried Siege and Overwatch with and without Reflex, and honestly, it does make a difference. Sadly, DLSS 3 is not backwards compatible with 3000 Series cards, but Nvidia says 35 games will support DLSS 3 by October, with many more due in the new year.
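Why removing the render queue lowers latency can be illustrated with a deliberately crude model: assume each frame already waiting in the queue delays your input by roughly one more GPU frame time. The function name and this one-frame-per-queued-frame assumption are hypothetical simplifications, not how Reflex is actually implemented, and real system latency also includes input sampling, CPU work and display scan-out.

```python
def approx_render_latency_ms(gpu_frame_time_ms: float, queued_frames: int) -> float:
    """Toy estimate of render latency from the render queue depth.

    Hypothetical model: the frame being rendered costs one GPU frame time,
    and every frame already waiting in the queue adds one more.
    """
    return gpu_frame_time_ms * (1 + queued_frames)


frame_time = 1000 / 100  # 100 FPS -> 10 ms per frame
with_queue = approx_render_latency_ms(frame_time, queued_frames=2)
without_queue = approx_render_latency_ms(frame_time, queued_frames=0)
print(f"queue of 2: {with_queue:.0f} ms, empty queue: {without_queue:.0f} ms")
```

Even in this simplified model, the saving scales with frame time, which is why a shallower queue matters most when frame rates are low.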

That’s worth bearing in mind if you’re considering a discounted 3000 series card. Having said all that, if you can bag yourself an RTX 4090 at somewhere close to RRP, assuming scalpers don’t grab them all and prices don’t go nuts, and you have a really beefy 4K setup or even an 8K TV that you want to game on and you want the best possible performance, then yeah, go for it. As for the 4080s, I think the higher-end 16 GB version is too expensive. They’re both too expensive, really, but between the two, I would go for the cheaper 12 GB version, and if you can pay more, go up to the 4090. I say that with the caveat that I haven’t tested them yet, and it may all change once we see benchmarks and reviews, but those are my initial impressions.

So what do you think? Will you be upgrading? Do you think they’re just ridiculously expensive? Or are you going to upgrade to another 3000 Series card?

Please share this article with your friends on social media and help them learn the TRUTH about Nvidia’s RTX 4090 & 4080.

This article is based on the reviewer’s personal opinions. You are responsible for fact-checking any content that may not be factual or accurate.

Title: The TRUTH about Nvidia’s RTX 4090 & 4080!